Increasing Speed and Accuracy Through Text Mining and Natural Language Processing in the Insurance Industry

November 3, 2020

In this interview, Antoine LY, Head of Data Science at SCOR Global Life, illustrates how text mining and Natural Language Processing (NLP) benefit insurers and the insured throughout the policy lifecycle, and why partnering with a powerful data science team rooted in the reinsurance business is the right choice for insurers looking to leverage this technology.

Could you give us a brief understanding of what Text Mining / Natural Language Processing (NLP) is all about?

Data can take many forms, and our ability to exploit data depends largely on our ability to identify the individual elements and then manipulate them to gain insight and make inferences. Numerical or tabular data are perhaps the most easily exploited data available to us, as there is little variability in their presentation. With textual data, however, identifying and extracting elements for examination becomes more complicated because text (language) is highly unstructured.

Both text mining and NLP refer to text manipulation using algorithms, and the subsequent analysis of that textual data:

- Specifically, text mining involves the identification and extraction of individual elements of text as data. A textual data point can be a character, word, sentence, paragraph, or even a full document.

- NLP refers to the capacity to mimic the human reading process. It leverages text mining, but with a higher level of sophistication, as it endeavors to capture the complexity of the language and, in turn, translate it into summarized information that a computer can use. Incidentally, one of the most powerful NLP algorithms created to date is only a couple of years old: Bidirectional Encoder Representations from Transformers (BERT), which you may have heard about when Google researchers introduced it in 2018.
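To make the idea of a "textual data point" concrete, here is a minimal sketch that splits a short document into paragraphs, sentences, words, and characters. The function name `text_units`, the sample text, and the naive splitting rules are assumptions made for illustration; real projects typically rely on dedicated tokenizers.

```python
import re

def text_units(document: str) -> dict:
    """Split a document into textual data points at several granularities."""
    # Paragraphs: blocks separated by one or more blank lines.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", document) if p.strip()]
    # Sentences: naive split after '.', '!' or '?' followed by whitespace.
    sentences = [
        s.strip()
        for p in paragraphs
        for s in re.split(r"(?<=[.!?])\s+", p)
        if s.strip()
    ]
    # Words: lower-cased runs of letters, digits, or apostrophes.
    words = re.findall(r"[A-Za-z0-9']+", document.lower())
    # Characters: every non-whitespace character.
    characters = [c for c in document if not c.isspace()]
    return {
        "paragraphs": paragraphs,
        "sentences": sentences,
        "words": words,
        "characters": characters,
    }

# Hypothetical policy wording, invented for this example.
sample = (
    "The policy covers accidental death. Pandemic events are excluded.\n\n"
    "Claims must be filed within 30 days."
)
units = text_units(sample)
for level, items in units.items():
    print(level, len(items))
```

Each level can then feed a different analysis: words for keyword counts, sentences for clause detection, and whole documents for classification.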

It’s important to stress at the outset that both text mining and NLP depend on the ability to separate and extract individual textual data points. Text is often digitized by scanning, or is simply available as a digital image. In those cases, Optical Character Recognition (OCR) is required. In brief, OCR is the electronic or mechanical conversion of images of typed, handwritten, or printed text into machine-encoded text, whether from a scanned document or an image.

Can you provide a specific example of how NLP will help insurers manage their book of business?

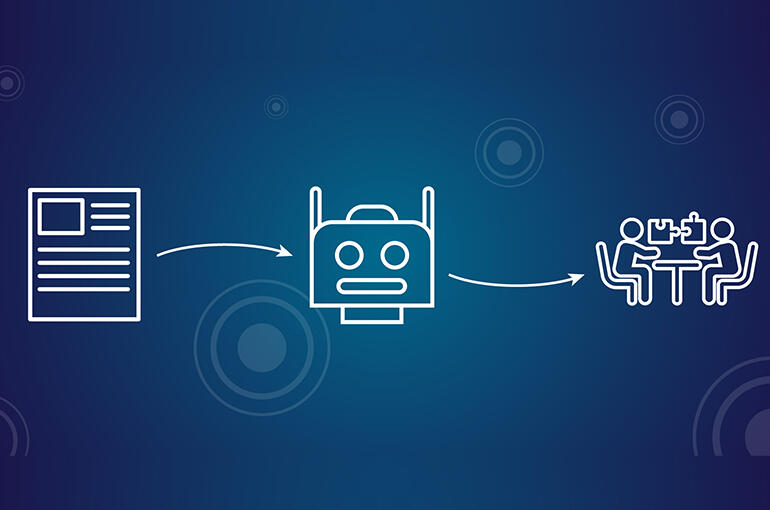

Imagine you want to know how many pandemic exclusions you have across your entire book of business. Without NLP and supporting technology, you must open every scanned document, check each one meticulously for every exclusion, and make sure you have identified all of them without error. This process would consume a tremendous amount of time and put accuracy at risk. With NLP, the task can be automated: each document is ‘electronically read’ and its text is matched against a library of exclusion patterns, so you get your answer quickly and accurately.
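As a rough illustration of that kind of automated search, the sketch below flags sentences that mention both a pandemic-related term and an exclusion cue. It assumes the contracts have already been converted to plain text (for example by OCR). The pattern lists, file names, and the function `find_pandemic_exclusions` are hypothetical, and simple keyword matching like this will miss paraphrased clauses that a trained NLP model could catch.

```python
import re

# Hypothetical pattern lists, invented for this example. A production library
# would be far richer and reviewed by underwriting experts.
PANDEMIC_TERMS = re.compile(
    r"\b(pandemic|epidemic|communicable disease|infectious disease)\b",
    re.IGNORECASE,
)
EXCLUSION_CUES = re.compile(
    r"\b(exclu(?:de|des|ded|sion|sions)|not covered|does not cover)\b",
    re.IGNORECASE,
)

def find_pandemic_exclusions(documents: dict) -> dict:
    """Map each document name to the sentences that look like pandemic exclusions."""
    hits = {}
    for name, text in documents.items():
        # Naive sentence split after '.', '!' or '?' followed by whitespace.
        sentences = re.split(r"(?<=[.!?])\s+", text)
        matched = [
            s.strip()
            for s in sentences
            if PANDEMIC_TERMS.search(s) and EXCLUSION_CUES.search(s)
        ]
        if matched:
            hits[name] = matched
    return hits

# Invented contract snippets standing in for OCR output.
contracts = {
    "treaty_A.txt": "This treaty covers mortality risk. Losses arising from a pandemic are excluded.",
    "treaty_B.txt": "This treaty covers mortality risk, including pandemic events.",
    "treaty_C.txt": "Exclusions: war, nuclear events, and any epidemic declared by the WHO.",
}
exclusions = find_pandemic_exclusions(contracts)
print(f"{len(exclusions)} of {len(contracts)} contracts contain a likely pandemic exclusion")
```

Requiring both a pandemic term and an exclusion cue in the same sentence keeps a contract that explicitly includes pandemic cover, such as the second snippet, out of the results.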

More generally, what other applications could NLP and text mining be leveraged to solve key pain points for insurers?

We can consider a few key points along a policy life cycle, beginning with underwriting. At the (re)insurance level, underwriters analyze a significant number of policies at each renewal period and as such are mired in documents. However, once a contract is digitized, for example by scanning, several NLP applications become possible. These include tracking changes in clauses and checking compliance, both of which reduce friction in the underwriting process.
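Clause tracking between two renewal periods can be sketched with Python's standard `difflib` module. The clause texts and the helper `clause_changes` below are invented for illustration, and the sketch assumes each contract has already been split into a list of clauses.

```python
import difflib

def clause_changes(previous: list, renewed: list) -> list:
    """Return removed ('- ') and added ('+ ') clauses between two contract versions."""
    diff = difflib.ndiff(previous, renewed)
    # Keep only additions and removals; drop unchanged lines and '?' hint lines.
    return [line for line in diff if line.startswith(("+ ", "- "))]

# Hypothetical clauses from last year's and this year's contract.
last_year = [
    "1. Coverage applies to death from any cause.",
    "2. Suicide is excluded during the first 12 months.",
]
this_year = [
    "1. Coverage applies to death from any cause.",
    "2. Suicide is excluded during the first 24 months.",
    "3. Losses arising from a pandemic are excluded.",
]
changes = clause_changes(last_year, this_year)
for change in changes:
    print(change)
```

An underwriter would then only need to review the flagged lines rather than reread the full contract.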

Several other opportunities are presented when leveraging text mining in life insurance, such as enhancing medical report analysis. Using NLP, we can extract key information from medical reports thereby reducing the processing time for more standard cases and enabling underwriters to focus on the most difficult or complex ones.

In the claims phase, this automation can simplify the evaluation of claims in process by reducing processing time, minimizing operational errors, and even helping with fraud detection. Text mining can likewise be used in claims adjudication to simplify the classification of claims and route them to the appropriate department.

When it comes to ‘reserving’, digital analysis of the comments on claim reports during the ‘First Notification of Loss’ or ‘Expert Claims Assessment’ stage is becoming progressively more developed as insurers strive to improve their reserving accuracy for severe claims. Descriptions can likewise provide valuable information which will enable insurers to better anticipate how a claim will develop and, in turn, better estimate the expected cost. In life insurance, the automated review of expert reports could likewise help in reserve projection. As an example, such a review could help in long-term care or specific critical illness products where risk factors can be cross correlated to different dependent diseases. Despite the low annual frequency of such reports, the amount of documentation can become significant over a lengthy study period, and an automated approach to text analysis is highly beneficial.

The benefit to insurers seems clear, but how does that translate down to benefit the insured?

From a pure insurance perspective, automated policy checks will offer consumers more transparency on the coverage of their policies from one period to another. Additional benefits are linked to the advantages that NLP presents in claims processing and adjudication. Simply put, thanks to the implementation of NLP within the insurance industry, the insured will experience faster response times and more accurate handling of their claims as they go to adjudication.

An ancillary consumer benefit of NLP, outside of the immediate insurance universe, comes from NLP-supported Enhanced Medical Diagnostics. Using NLP to review multiple relevant documents, doctors can ensure they cover all possible diseases that could match a patient’s symptoms. Of course, early and accurate diagnosis is key to ensuring good patient health, and certainly to avoiding complications in the event of illness. There is a clear benefit in this regard to both insurance consumers and, by extension, us as (re)insurers.

Why should an insurer consider SCOR vs. other software designers, tech vendors or consultancies to design and implement their Text Mining / NLP capabilities?

As (re)insurance is our core business, we are keenly aware of the various insurer pain points that can be addressed through Artificial Intelligence and Machine Learning projects such as text mining and NLP. Unlike more generalist providers of this technology, our roots in the insurance industry place us in the best position to partner strategically with clients to address their needs as insurers. Our unique blend of actuarial excellence at the core of risk, combined with a powerful and agile Data Analytics Solutions Team of Business Integrators, Data Value Creators, Data Scientists, and Data Engineers, makes SCOR an invaluable partner to implement such projects within an insurance context.

Every project is different, with its own specific requirements. How can SCOR ensure successful implementation across NLP projects with varying customer needs?

While specific requirements will vary across both customer needs and the various insurance disciplines of underwriting, claims analysis, fraud detection, and so forth, some steps are common whenever text is manipulated. For this reason, our team developed a proprietary library to facilitate the use of text mining and NLP within an insurance context. This SCOR library reflects each of the standard steps needed to process text. It is based on open source libraries and Python code, along with some additional customized features that help deal with different languages and make usage uniform.
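The SCOR library itself is proprietary and not shown here. Purely as an illustration of what common text-processing steps can look like, the sketch below chains normalization, tokenization, and stop-word removal behind one function that works the same way for two languages. All names (`normalize`, `tokenize`, `preprocess`, `STOP_WORDS`) and the tiny stop-word lists are assumptions made for this example, using only the Python standard library.

```python
import re
import unicodedata

# Tiny illustrative stop-word lists (already accent-free and lower-cased).
# Real projects would use fuller, curated lists per language.
STOP_WORDS = {
    "en": {"the", "a", "of", "is", "and", "to", "in"},
    "fr": {"le", "la", "les", "de", "du", "et", "est", "un", "une", "a"},
}

def normalize(text: str) -> str:
    """Lower-case and strip accents so that 'Décès' and 'deces' compare equal."""
    decomposed = unicodedata.normalize("NFKD", text.lower())
    return "".join(c for c in decomposed if not unicodedata.combining(c))

def tokenize(text: str) -> list:
    """Split normalized text into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text)

def preprocess(text: str, language: str = "en") -> list:
    """Apply the same steps to any supported language: normalize, tokenize, filter."""
    stop_words = STOP_WORDS.get(language, set())
    return [t for t in tokenize(normalize(text)) if t not in stop_words]

# Invented claim descriptions in English and French.
tokens_en = preprocess("The claim is related to a fracture of the femur.", "en")
tokens_fr = preprocess("Le décès est lié à une fracture du fémur.", "fr")
print(tokens_en)
print(tokens_fr)
```

Keeping a single `preprocess` entry point with a `language` argument is one simple way to make usage uniform across languages, which is the design goal described above.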

More about SCOR’s Data Analytics Solutions team

The SCOR Data Analytics Team is a unique group of data experts, risk analysts, and business developers all working together to achieve rapid and customized product deliveries for customers. The team relies on an innovation mindset and state-of-the-art collaborative technologies:

- To amplify our innovative capacity, we created our Data Analytics Solutions Platform (DASP), a unified programming development and prototyping ecosystem. This unique collaborative environment is a virtual forum where professionals from our actuarial, data, underwriting, and medical teams work together with customer input from business developers to share knowledge and innovate. The DASP is built upon state-of-the-art technologies that facilitate fast and innovative products for our customers.

- In an agile way, the team and each client meet at regular touch points to check compliance with requirements and constraints such as data privacy regulations. We partner with our clients throughout the project lifecycle to ensure our models and products are ready to use in production within our clients’ IT systems.

The SCOR Data Analytics Team’s ability to cover the full insurance value chain is demonstrated by a palette of more than 25 delivered projects spanning AI-supported data capture, Accelerated Underwriting, and Enhanced insurance management, among others.

How can I learn more from SCOR about text mining / NLP for insurers?

More detailed information and technical deep dives into the process and application of text mining / NLP can be obtained by downloading our detailed white paper on the subject or by contacting the SCOR Data Analytics Solutions Team.

Stay tuned for the next topic we will cover, Optical Character Recognition, and future white papers on related subjects from the SCOR Data Analytics Solutions Team.